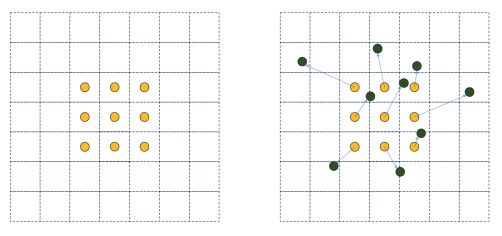

We introduce an approach to video frame interpolation, a key task in video processing, that uses flow-guided deformable convolution in place of traditional warping based on optical flow. The method combines the flexibility and adaptability of deformable convolutions with the motion information provided by optical flow, resulting in more accurate and reliable frame interpolation. Traditional techniques gather pixel information only from the specific points indicated by optical flow. Our approach instead computes a weighted sum of neighboring pixel information through learnable offsets guided by the optical flow. This gives a more flexible way to fetch pixel data, even when the optical flow is inaccurate. The approach has promising implications for real-world applications such as video compression and slow-motion video creation without high-performance cameras. Our study is a step toward improving the quality and efficiency of video processing tasks through deformable convolution techniques.
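The difference between the two sampling schemes can be illustrated with a minimal NumPy sketch. It is not the paper's implementation. It assumes a single-channel image, a flow field stored as `(dy, dx)` per pixel, and that each sampling location is the flow target plus a residual offset. The function names and the `offsets` and `weights` arrays are hypothetical; in a real model a network would predict them, whereas here they are plain inputs.

```python
import numpy as np


def bilinear_sample(img, y, x):
    """Sample a 2-D image at a fractional (y, x), clamping to the border."""
    h, w = img.shape
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * img[y0, x0] + wx * img[y0, x1]
    bottom = (1 - wx) * img[y1, x0] + wx * img[y1, x1]
    return (1 - wy) * top + wy * bottom


def flow_warp(img, flow):
    """Conventional backward warping: one sample per pixel at p + flow(p)."""
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for y in range(h):
        for x in range(w):
            dy, dx = flow[y, x]
            out[y, x] = bilinear_sample(img, y + dy, x + dx)
    return out


def flow_guided_deform_sample(img, flow, offsets, weights):
    """Weighted sum of K samples taken around p + flow(p).

    offsets: (H, W, K, 2) residual (dy, dx) offsets; assumed inputs here,
             learned in a real model.
    weights: (H, W, K) per-sample weights; assumed inputs here as well.
    """
    h, w = img.shape
    k = offsets.shape[2]
    out = np.zeros((h, w), dtype=float)
    for y in range(h):
        for x in range(w):
            dy, dx = flow[y, x]
            for i in range(k):
                oy, ox = offsets[y, x, i]
                sample = bilinear_sample(img, y + dy + oy, x + dx + ox)
                out[y, x] += weights[y, x, i] * sample
    return out
```

With a single sample, zero residual offset, and unit weight, `flow_guided_deform_sample` reduces to `flow_warp`. With several samples, each output pixel draws on a neighborhood of the flow target, so a slightly wrong flow vector can still be compensated by the offsets and weights. A practical implementation would use a batched, differentiable operator such as `torchvision.ops.deform_conv2d` rather than Python loops.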

Published at the International Conference on Electronics, Information, and Communication (ICEIC 2024), January 2024